Auto-regressive models have achieved impressive results in 2D image generation by modeling joint distributions in grid space. In this paper, we extend auto-regressive models to 3D domains, and seek a stronger ability of 3D shape generation by improving auto-regressive models at capacity and scalability simultaneously. Firstly, we leverage an ensemble of publicly available 3D datasets to facilitate the training of large-scale models. It consists of a comprehensive collection of approximately 900,000 objects, with multiple properties of meshes, points, voxels, rendered images, and text captions. This diverse labeled dataset, termed Objaverse-Mix, empowers our model to learn from a wide range of object variations. For data processing, we employ four machines with 64-core CPUs and 8 A100 GPUs each over four weeks, utilizing nearly 100TB of storage due to process complexity.

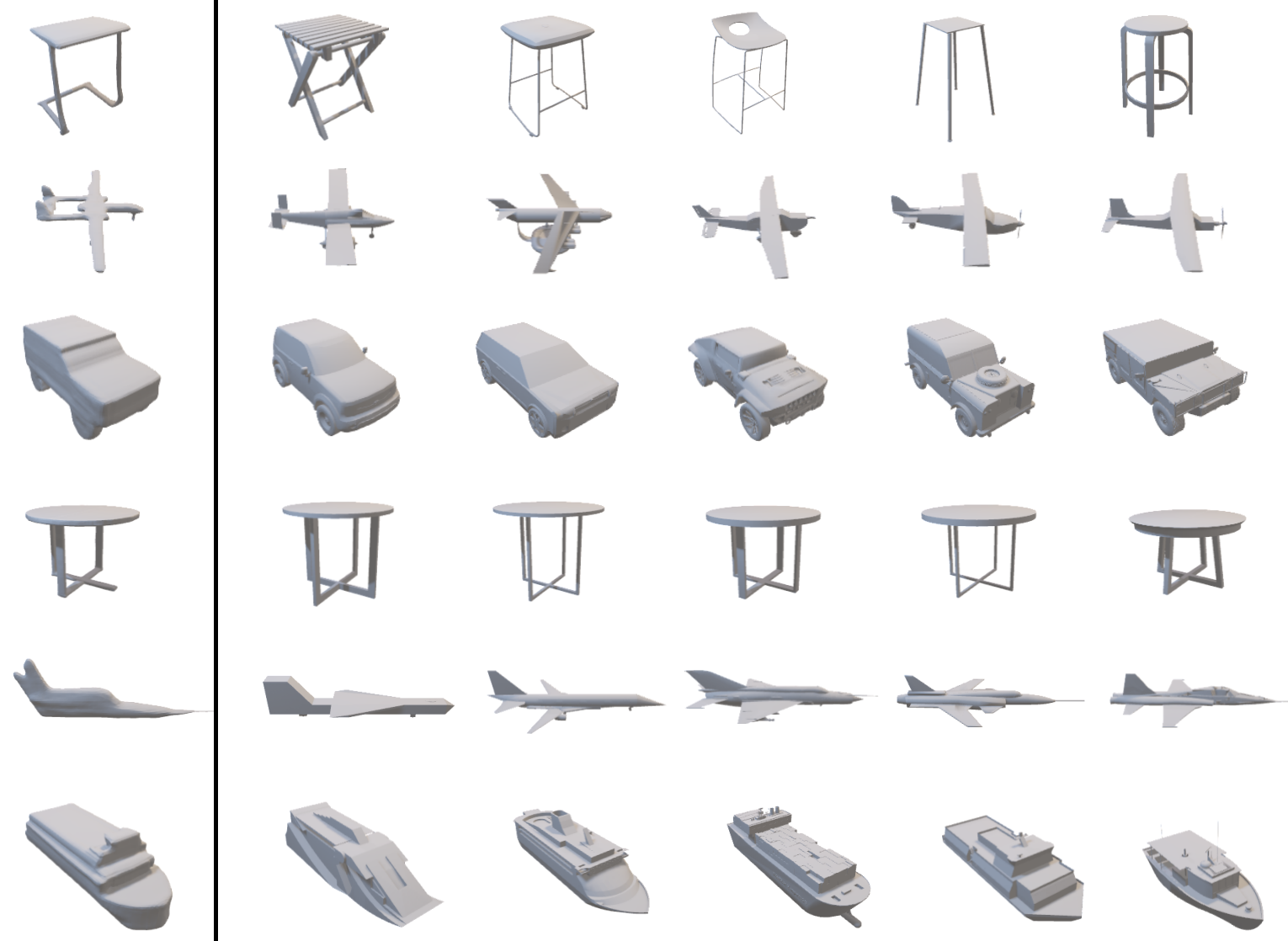

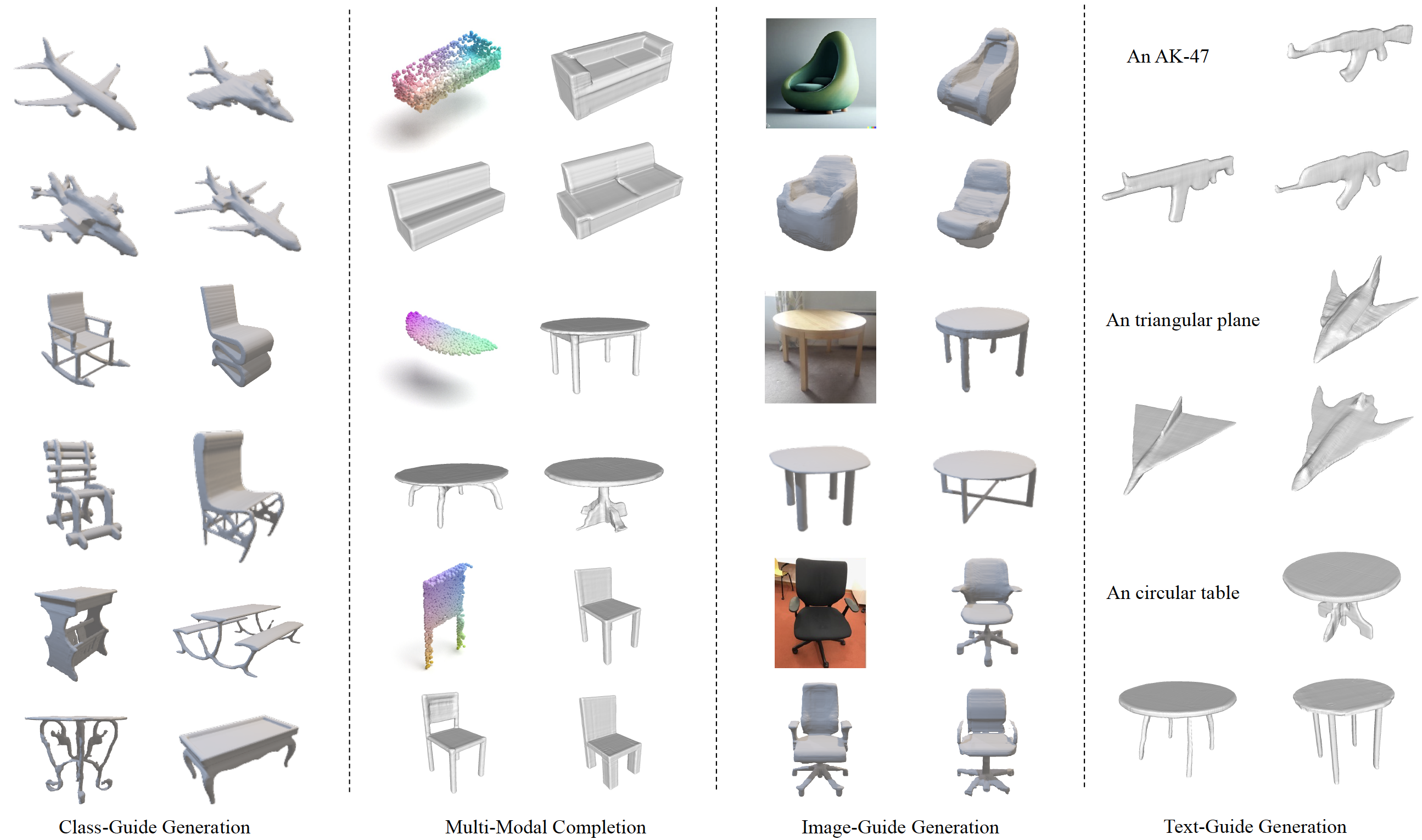

However, directly applying 3D auto-regression encounters critical challenges of high computational demands on volumetric grids and ambiguous auto-regressive order along grid dimensions, resulting in inferior quality of 3D shapes. To this end, we then present a novel framework Argus3D in terms of capacity. Concretely, our approach introduces discrete representation learning based on a latent vector instead of volumetric grids, which not only reduces computational costs but also preserves essential geometric details by learning the joint distributions in a more tractable order. The capacity of conditional generation can thus be realized by simply concatenating various conditioning inputs to the latent vector, such as point clouds, categories, images, and texts. In addition, thanks to the simplicity of our model architecture, we naturally scale up our approach to a larger model with an impressive 3.6 billion parameters, further enhancing the quality of versatile 3D generation.

Extensive experiments on four generation tasks demonstrate that Argus3D can synthesize diverse and faithful shapes across multiple categories, achieving remarkable performance.